“Move fast, but think twice.” The ethics line AI coders can’t ignore.

Just because something is available for free doesn’t mean people should use it unethically. Ethics separates humans from animals. As AI accelerates software development, it also raises hard questions: what should we build, what data should we use, and how safe is the code AI generates? That’s the line we need to draw, together.

Ethics in software development was never this urgent, but it is now, because no-code tools let non-technical people build software too. When a vast number of people can use a tool easily, some of them will try to misuse it for personal benefit or amusement.

At the same time, people who have no wish to harm others through their software can still end up doing so, simply because they don’t know how to meet ethical standards during development. This blog covers those areas so you can avoid such mistakes, even accidental ones.

The Ethics of AI in Software Development

Knowing some of the relevant research and rules on AI use will help you understand what governments and organizations are doing, and where they are doing nothing at all.

1. The new reality: AI is inside the dev stack

AI can perform many tasks nowadays, such as writing tests, finding bugs, and even remembering your on-screen activity. So whether you are watching TV series, searching for a cure for an illness, or planning a trip, your phone can learn a great deal about it and recommend products based on your activity.

But this can put customers’ personal information at risk, whether a developer tweaks the code to misuse that data on purpose or exposes it by accident. A 2025 Checkmarx study found that 81% of companies knowingly ship insecure code, and 34% report that over 60% of their code is generated by AI. Don’t you think that’s risky? Without stronger governance, it can become a “perfect storm.”

2. Law and order: the EU AI Act is here

The EU AI Act formally entered into force on August 1, 2024, and key parts already apply: some prohibitions and the AI literacy requirements from February 2025, and duties for general-purpose AI (GPAI) models from August 2025. High-risk AI rules phase in through 2027. If you ship software to the EU, ethics is not optional; it’s essential.

3. Practical compass: NIST’s AI Risk Management Framework

NIST has developed the AI Risk Management Framework (AI RMF) to help individuals and organizations manage the risks of using AI. Released in January 2023, it gives teams a shared approach to govern, map, measure, and manage AI risks throughout the lifecycle, from design through deployment. It’s voluntary, but it is fast becoming a reference point for building trustworthy AI.

4. The code problem: “helpful” suggestions, hidden flaws

As discussed above, some developers are unaware of the ethical boundaries their algorithms can cross; they simply do what they are told. That should not be an excuse for building software for the wrong reasons or without following ethics. You may be surprised to learn that AI code tools often produce insecure patterns, whether because they learn from public code that contains the same flaws, because they are built with bad intentions, or because their output is not thoroughly tested.

- Analysis of Copilot-generated snippets revealed that around 33% of Python/JavaScript samples had security weaknesses across dozens of CWE categories.

- Policy groups warn about three risks: insecure output, models that can be attacked, and feedback loops that spread bad code.

So AI can boost productivity, but it can also normalize insecure defaults if we don’t check its work. Some teams and organizations do check, but when they don’t, the flaws usually go unnoticed by the people using the software.
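To make “insecure patterns” concrete, here is a minimal Python sketch of one classic weakness a reviewer should catch, SQL injection (CWE-89), next to the parameterized query that fixes it. It uses only the standard-library `sqlite3` module; the table, data, and function names are hypothetical, and this is an illustration rather than output captured from any particular AI tool.

```python
import sqlite3

def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Insecure pattern (CWE-89): user input is pasted into the SQL text,
    # so input like "x' OR '1'='1" changes what the query means.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn: sqlite3.Connection, username: str):
    # Safer pattern: a parameterized query. The driver passes the value
    # separately, so it is always treated as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()

# Throwaway in-memory database with two hypothetical users.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
print(len(find_user_safe(conn, payload)))    # 0: no user has that literal name
```

The fix is worth enforcing in review: values travel separately from the SQL text, so no input can rewrite the query.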

5. Privacy flashpoint: the Recall controversy

Do you remember Microsoft’s Recall feature? It was meant to help users by taking regular screenshots so they could remember what they did on their computer. However, it quickly raised concerns because private details, such as passwords and credit card numbers, were also being captured.

After backlash, Microsoft paused the rollout and reintroduced it with filters. Still, tests showed sensitive data slipping through. Even the Brave browser now blocks Recall by default. It’s a clear example of how convenience can clash with user privacy.

So, Where Do We Draw the Line?

After reading the points above, you might be wondering what to do and what to avoid. Here are some practices worth trying.

1. User First, Always

If you keep your users in mind, you reduce the chance of mistakes, provided you are not building for an audience with harmful intentions. For example, if an AI tool is built to genuinely help users manage tasks, it will earn their trust. But if it secretly collects data to sell or to manipulate their choices, users will feel betrayed. Ethics in AI means asking, “Does this decision truly benefit the user?”, because long-term trust is worth more than short-term gains. So, don’t collect what you can’t protect.
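One way to act on “don’t collect what you can’t protect” is data minimization: decide up front which fields a feature needs and drop everything else at the point of collection. A minimal Python sketch, assuming a hypothetical task-manager feature and hypothetical field names:

```python
# Hypothetical allowlist: the only fields a task-manager feature needs.
ALLOWED_FIELDS = {"user_id", "task_title", "due_date"}

def minimize(record: dict) -> dict:
    """Keep only allowlisted fields; anything else is dropped before storage."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}

incoming = {
    "user_id": 42,
    "task_title": "Renew passport",
    "due_date": "2025-10-01",
    "contacts": ["ann@example.com"],  # not needed for the feature
    "location": (51.5, -0.12),        # not needed for the feature
}
print(minimize(incoming))
# {'user_id': 42, 'task_title': 'Renew passport', 'due_date': '2025-10-01'}
```

An allowlist fails safe: a new field added upstream is dropped until someone decides the feature needs it, whereas a blocklist would quietly keep it.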

2. Human in the Loop for Safety-Critical Code

Even if AI can handle and automate many tasks effectively, humans are still needed to check that everything works correctly. Without a human in the loop, a single glitch in a machine, device, or app can lead to unpleasant or even dangerous outcomes.

So treat AI-generated code like code from a junior hire: review it, test it thoroughly, and scan it before merging. Studies like the ones cited above show that the risk is real when AI is left to complete a task on its own.
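A team could encode the junior-hire rule as a pre-merge gate. The sketch below is a hypothetical Python check, not the API of GitHub, GitLab, or any real CI system: the `ChangeRequest` fields stand in for whatever your review tool reports about tests, scanner findings, and approvals.

```python
from dataclasses import dataclass, field

@dataclass
class ChangeRequest:
    # Hypothetical fields; map them onto what your review tool reports.
    title: str
    ai_assisted: bool
    tests_passed: bool = False
    scan_findings: int = 0  # open findings from your security scanner
    human_approvals: list[str] = field(default_factory=list)

def can_merge(cr: ChangeRequest) -> tuple[bool, list[str]]:
    """Return (allowed, reasons) for a hypothetical pre-merge gate."""
    reasons = []
    if not cr.tests_passed:
        reasons.append("tests have not passed")
    if cr.scan_findings > 0:
        reasons.append(f"{cr.scan_findings} open security finding(s)")
    # Like a junior hire's code, AI-assisted changes need a human sign-off.
    if cr.ai_assisted and not cr.human_approvals:
        reasons.append("AI-assisted change has no human approval")
    return (not reasons, reasons)

cr = ChangeRequest("Add login form", ai_assisted=True, tests_passed=True)
print(can_merge(cr))  # (False, ['AI-assisted change has no human approval'])
cr.human_approvals.append("reviewer-1")
print(can_merge(cr))  # (True, [])
```

Returning the reasons, not just a yes/no, tells the author exactly what is still missing.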

3. Document Model Use

Who is your audience? What are your software’s features? How will it help users? What steps do they follow to use it? These are just a few of the questions your documentation should answer; aim to address every user concern. Keeping a record of the work also helps new developers join the project without wasting time, because they can pick up where you left off, and it reduces the chance of errors.

Moreover, track prompts, model versions, and where AI touched code and product text. This makes the process clear and repeatable for future work.
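Tracking prompts and model versions can be as simple as an append-only log. A minimal Python sketch, assuming a hypothetical record schema written to a JSON Lines file (one JSON object per line); the model name is a placeholder, not a real model.

```python
import json
import os
import tempfile
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

@dataclass
class AIUsageRecord:
    # Hypothetical schema; rename fields to match your team's conventions.
    timestamp: str
    model: str          # model name and version reported by your provider
    prompt: str
    touched: list[str]  # files or product-text locations changed by AI output
    reviewer: str       # the human who reviewed the output

def log_ai_use(path: str, record: AIUsageRecord) -> None:
    """Append one record as a single JSON line, so history is easy to grep and diff."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")

# Write to a temporary directory so the example leaves no files behind in your project.
path = os.path.join(tempfile.mkdtemp(), "ai_usage.jsonl")
log_ai_use(path, AIUsageRecord(
    timestamp=datetime.now(timezone.utc).isoformat(),
    model="example-model-2025-01",  # placeholder, not a real model name
    prompt="Write unit tests for parse_invoice()",
    touched=["billing/parser.py", "tests/test_parser.py"],
    reviewer="jdoe",
))
```

Appending rather than rewriting means earlier entries are never lost, which is what makes the trail repeatable.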

4. Consent and Context

If an AI tool collects personal information, such as screen activity, keystrokes, or other data, users should have complete control over it. Collection should start only after users give consent, with a clear explanation of what you collect and why, and users should be able to turn it off at any time. The Microsoft Recall controversy showed why this matters: people don’t like surprise tracking.
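In code, “only work if users give consent” means collection is off by default and every write checks the user’s choice. A minimal Python sketch with a hypothetical `ActivityCollector` class; deleting stored data when consent is revoked is a design choice assumed here, not something the article prescribes.

```python
from dataclasses import dataclass, field

@dataclass
class ActivityCollector:
    """Hypothetical collector that stores nothing until the user opts in."""
    purpose: str = "Suggest shortcuts based on the apps you use most"
    consented: bool = False  # off by default
    events: list[str] = field(default_factory=list)

    def request_consent(self, user_agrees: bool) -> None:
        # A real UI would show `purpose` and explain what is collected before asking.
        self.consented = user_agrees

    def revoke(self) -> None:
        # Turning collection off also deletes what was stored (assumed policy).
        self.consented = False
        self.events.clear()

    def record(self, event: str) -> bool:
        """Store the event only if the user has consented; report whether it was stored."""
        if not self.consented:
            return False  # no consent, no collection
        self.events.append(event)
        return True

c = ActivityCollector()
print(c.record("opened editor"))  # False: the user has not opted in
c.request_consent(user_agrees=True)
print(c.record("opened editor"))  # True
c.revoke()
print(c.events)                   # []
```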

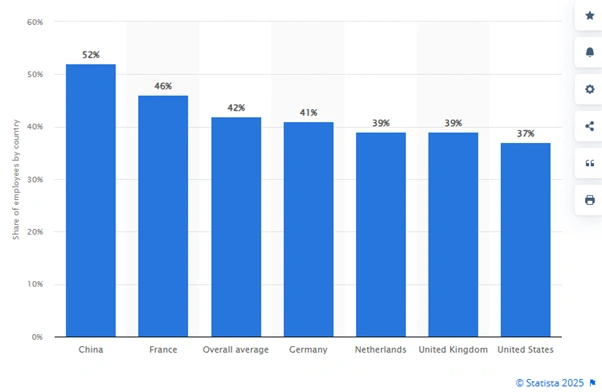

Some employees even report that their companies have already run into ethical problems caused by AI.

5. Bias and Harm Checks

You may have seen reports that Grok, xAI’s chatbot, avoided negative answers about Elon Musk because of how it was instructed. Similarly, some AI tools don’t give you the answer that would help you decide quickly; instead, they confuse you. When a question involves politicians, celebrities, or other influential people, they either slant the answer or fall back on something generic. Don’t let that happen to your tool: run fairness and abuse tests before launch. The EU AI Act pushes this mindset, and it’s simply the right thing to do.
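One simple fairness test for a tool that makes decisions (approve or deny, show or hide) is the demographic parity difference: compare the positive-outcome rate across groups and flag large gaps. The Python sketch below uses hypothetical data and a team-chosen threshold; it is one metric among many, and chatbot answers would need different checks, such as comparing responses to matched prompts.

```python
from collections import defaultdict

def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, approved) pairs."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += ok  # True counts as 1, False as 0
    return {g: approved[g] / totals[g] for g in totals}

def parity_gap(decisions: list[tuple[str, bool]]) -> float:
    """Demographic parity difference: highest group rate minus lowest."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs on a held-out test set, labeled by group.
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", True), ("B", False), ("B", False), ("B", False)]
gap = parity_gap(decisions)
THRESHOLD = 0.2  # team-chosen tolerance, an assumption rather than a standard
print(f"gap = {gap:.2f}", "FAIL" if gap > THRESHOLD else "PASS")  # gap = 0.50 FAIL
```

Running a check like this before each launch turns “be fair” into a number the team can track and argue about.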

Drawing the Line Together

Being ethical on your own while creating algorithms helps, but it isn’t enough, because other people work on the same project. Ethics needs contributions from everyone involved, whether they build AI models or develop software. AI has become an inevitable part of development, but without ethics, it risks eroding trust. From privacy debates like Microsoft’s Recall to biases hidden in algorithms, you have seen how innovation can cross lines when users aren’t put first.

So, the truth is, there is no single line. There is a shared responsibility. Developers, businesses, and even users must keep asking: “Does this solve a problem without creating a bigger one?”

As AI continues to shape the future, the question isn’t whether we use it, but how we use it responsibly.

And maybe the real test of good AI isn’t just how smart it is, but how human it feels in its fairness.

So, where do we draw the line?

Right where technology stops respecting people, and we refuse to let it cross.